In this post we review a variety of elementary functions often seen in the study of machine learning / deep learning, as well as various ways of combining these elementary functions to create an unending array of interesting and complicated functions with known equations. These elementary functions are used extensively not only throughout the study of machine learning / deep learning, but throughout many areas of science in general.

# run this cell to import all necessary libraries for the notebook experiments

import sys

sys.path.append('../../')

from mlrefined_libraries import basics_library as baslib

import numpy as np

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

Polynomial functions are perhaps the first set of elementary functions with known equations one learns about. They are simple mathematical objects - when we are dealing with only one input $x$, each polynomial function simply raises the input to a given power. For example, the first three polynomial functions look like

\begin{equation} f_1(x) = x^1, ~~ f_2(x) = x^2,~~ f_3(x)=x^3 \end{equation}

Notice how the first element here, written as $f_1(x) = x^1$ (often written just as $f_1(x) = x$, ignoring the superscript $1$), is a simple line with slope one and zero intercept - and the second, $f_2(x) = x^2$, is a simple parabola. We can go on listing more polynomials, one for each positive whole number, with the $m^{th}$ polynomial taking the form $f_m(x) = x^m$. Because of this indexing by powers the polynomials naturally form a catalog or family of functions, and there are an infinite number of them - one for each positive whole number $1,2,3,4,\ldots$.
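The formula $f_m(x) = x^m$ can also be evaluated directly with NumPy. The following is a minimal sketch, where the helper name `poly` is a hypothetical choice made for illustration and is not part of `mlrefined_libraries`.

```python
import numpy as np

def poly(x, m):
    """Evaluate the m-th polynomial element f_m(x) = x**m (hypothetical helper)."""
    return np.asarray(x, dtype=float) ** m

# a small table of input values: -2, -1, 0, 1, 2
x = np.linspace(-2, 2, 5)

# evaluate the first three polynomial elements on the table
for m in (1, 2, 3):
    print(f"f_{m}(x) =", poly(x, m))
```

Each row printed is the table of output values for one polynomial element evaluated over the same inputs.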

Using their equations we can plot the first few non-constant polynomial elements over a sample of input values - which we do in the next Python cell.

# plot the first few polynomials

baslib.basics_plotter.poly_2d_plotter()

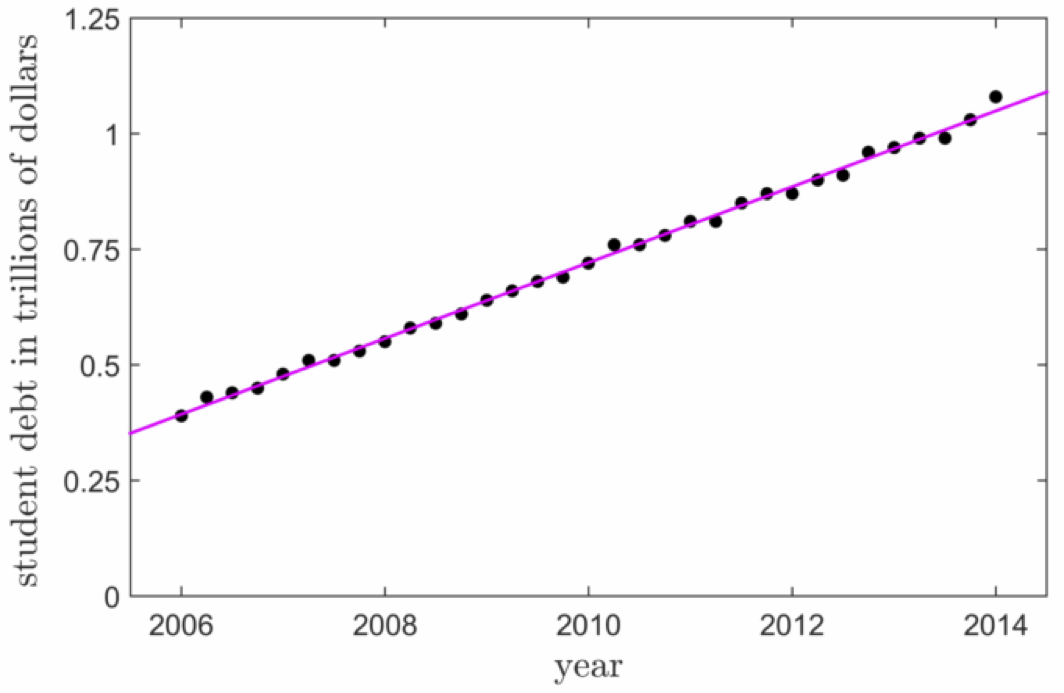

The degree one polynomial - otherwise known as a line - is one of the most common models used for low-dimensional data fitting. Take for example the plot shown below, taken from Machine Learning Refined, showing total student loan debt (money students borrow to pay for university fees and tuition) held in the United States. Measured quarterly from 2006 to 2014, the overall trend of the data - upward and onward to well over one trillion U.S. dollars - is quite linear, hence a line (a degree one polynomial) models this data quite well, allowing one to make reasonable predictions about its future value.

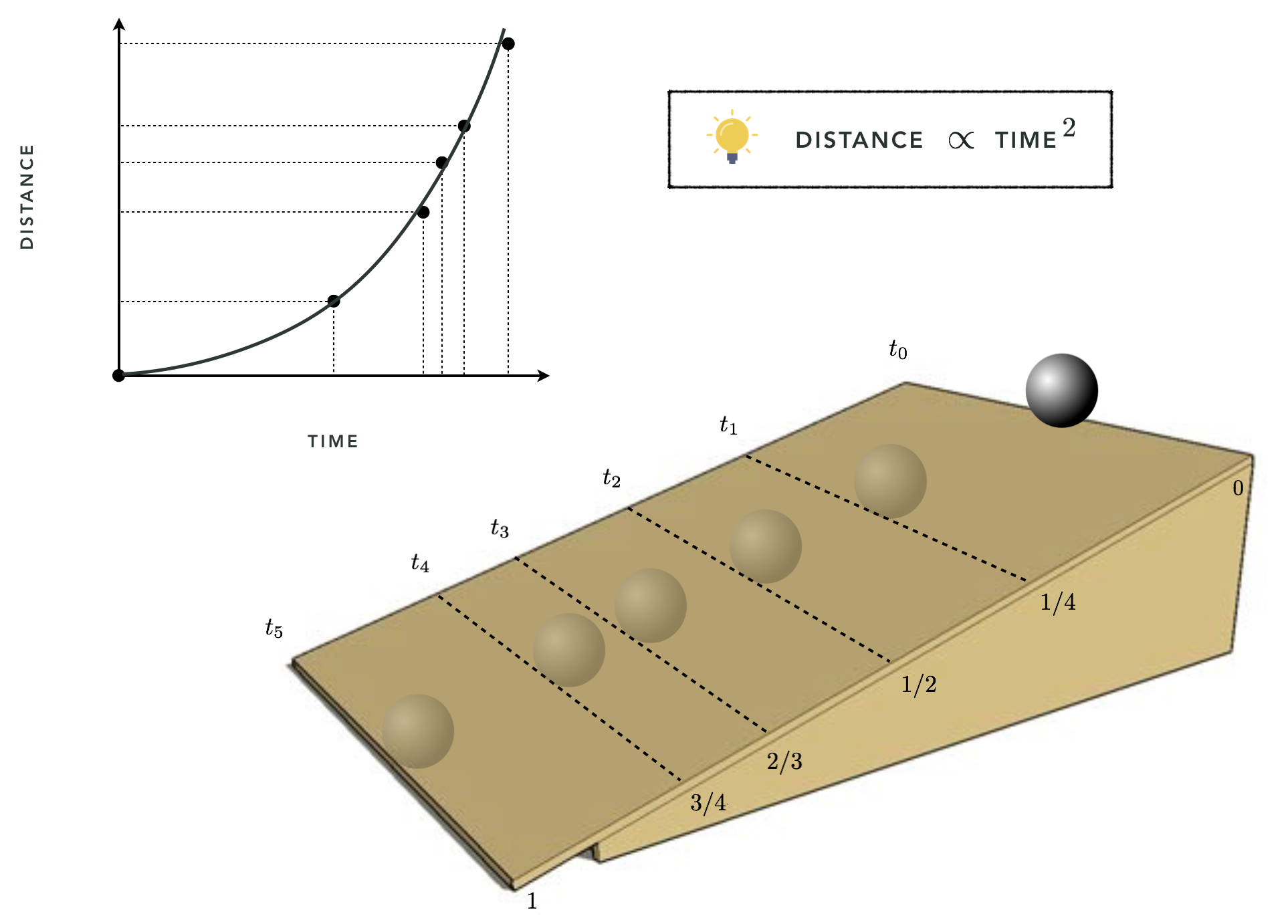
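To make the idea of fitting a line concrete, here is a minimal sketch using `np.polyfit`. The data is synthetic and generated from a hypothetical line with a little noise added. It is not the student loan data shown in the figure, and the slope, intercept, and noise level are assumptions chosen only for illustration.

```python
import numpy as np

# Synthetic, illustrative data only: these are NOT the student loan figures.
rng = np.random.default_rng(0)           # fixed seed for reproducibility
true_slope, true_intercept = 2.0, 1.0    # hypothetical ground-truth line
x = np.linspace(0.0, 8.0, 33)            # 33 evenly spaced inputs
y = true_intercept + true_slope * x + rng.normal(0.0, 0.1, size=x.shape)

# Least-squares fit of a degree one polynomial; coefficients are returned
# highest power first, i.e. (slope, intercept).
slope, intercept = np.polyfit(x, y, deg=1)
print(f"fitted line: y = {intercept:.3f} + {slope:.3f} x")

# Use the fitted line to predict the output at a new input.
x_new = 10.0
y_pred = intercept + slope * x_new
print(f"prediction at x = {x_new}: {y_pred:.3f}")
```

Because the data here is nearly linear by construction, the fitted slope and intercept land close to the values used to generate it.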

In 1638 Galileo Galilei, famously condemned by the Catholic Church for daring to claim that the earth orbited the sun and not the converse (as was the prevailing belief at the time), published his final book: Discourses and Mathematical Demonstrations Relating to Two New Sciences. In this book, written as a discourse among three men in the tradition of Aristotle, he described his experimental and philosophical evidence for the notion of uniformly accelerated physical motion. Specifically, Galileo (and others) had the intuition that the acceleration of an object due to (the force we now know as) gravity is uniform in time, or in other words that the distance an object falls is directly proportional (i.e., linearly related) to the square of the amount of time it has been traveling - perfectly modeled by a weighted degree two polynomial. This relationship was empirically solidified using the following ingeniously simple experiment performed by Galileo.

Repeatedly rolling a metal ball down a grooved piece of wood 5 and 1/2 meters long set at an incline - shown figuratively in the plot below taken from Machine Learning Refined - Galileo timed how long the ball took to get 1/4, 1/2, 2/3, 3/4, and all the way down the wood ramp.
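Under the weighted degree two model described above, $d = w\,t^2$ for some unknown weight $w$, the time needed to cover a given fraction of the ramp is proportional to the square root of that fraction. The sketch below computes these relative times for the fractions of the ramp Galileo used. It assumes an idealized ball starting from rest, and it uses no measured data.

```python
import numpy as np

# Fractions of the ramp at which the ball is timed (from the text above).
fractions = np.array([1/4, 1/2, 2/3, 3/4, 1.0])

# Under the model d = w * t**2, the time to cover distance d is sqrt(d / w).
# Dividing by the time for the full ramp cancels the unknown weight w.
relative_times = np.sqrt(fractions)

for frac, t in zip(fractions, relative_times):
    print(f"fraction of ramp: {frac:.3f}  ->  fraction of total time: {t:.3f}")
```

For instance, under this model the ball reaches the one-quarter mark in half the time it takes to travel the whole ramp.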

When we have two inputs - $x_1$ and $x_2$ - we also have a catalog of polynomial elements - polynomial functions with known equations. However, now that we have two inputs, the form of each polynomial involves raising each input to a power and multiplying the results. The first few polynomial equations look like this

$$f_1(x_1,x_2) = x_1, ~~ f_2(x_1,x_2) = x_2, ~~ f_3(x_1,x_2) = x_1x_2, ~~ f_4(x_1,x_2) = x_1x_2^2$$

Basically, to create these polynomials of two inputs we pick two nonnegative whole numbers - say $p$ and $q$ - then we make a polynomial in each single input, $x_1^p$ and $x_2^q$, and multiply the results together, giving $f(x_1,x_2) = x_1^px_2^q$. Once again, since we can list off an infinite number of nonnegative integer pairs $(1,0), (0,1), (1,1), (1,2),\ldots$ and there is a polynomial for each such pair, there are infinitely many polynomial elements here.

We plot a few of these two-input polynomials over a range of input values in the next Python cell.

# plot the first few 3d polynomials

baslib.basics_plotter.poly_3d_plotter()
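The two-input construction $f(x_1,x_2) = x_1^px_2^q$ can be sketched numerically by evaluating it over a grid of input pairs. The helper name `poly2` below is hypothetical and chosen for illustration. It is not part of `mlrefined_libraries`.

```python
import numpy as np

def poly2(x1, x2, p, q):
    """Evaluate f(x1, x2) = x1**p * x2**q (hypothetical helper)."""
    return np.asarray(x1, dtype=float) ** p * np.asarray(x2, dtype=float) ** q

# a 3 x 3 grid of input pairs: x1 varies along columns, x2 along rows
grid = np.linspace(-1, 1, 3)
x1, x2 = np.meshgrid(grid, grid)

# evaluate the element with (p, q) = (1, 2), i.e. f(x1, x2) = x1 * x2**2
f = poly2(x1, x2, p=1, q=2)
print(f)
```

A surface plot of such a function is simply this kind of grid evaluation drawn in three dimensions, typically over a much finer grid.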

In general we can make polynomials taking in $N$ (e.g., $N=10$) inputs $x_1,\,x_2,\,\ldots,\,x_N$ by following the same pattern we saw for two inputs. That is, we pick a set of $N$ nonnegative integers like $(2,10,1,...,23,14)$, raise each input variable to its corresponding number - for example $x_1^2$, $x_2^{10}$, $x_3^{1}$,...,$x_{N-1}^{23}$, $x_N^{14}$ - and multiply these terms together, forming the polynomial element $f(x_1,x_2,...,x_{N-1},x_N) = x_1^{2} x_2^{10} x_3^1 \cdots x_{N-1}^{23}x_N^{14}$.
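The same pattern for $N$ inputs amounts to raising each input to its own power and taking the product of the results. A minimal sketch follows, where the helper name `monomial` and the small example values are assumptions made for illustration.

```python
import numpy as np

def monomial(x, powers):
    """Evaluate x_1**p_1 * x_2**p_2 * ... * x_N**p_N (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    powers = np.asarray(powers)
    if x.shape != powers.shape:
        raise ValueError("need exactly one power per input")
    # raise each input to its corresponding power, then multiply the terms
    return np.prod(x ** powers)

# an example with N = 3 inputs and the powers (2, 1, 3)
x = [2.0, 3.0, 0.5]
powers = [2, 1, 3]
value = monomial(x, powers)   # 2**2 * 3**1 * 0.5**3
print(value)
```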

Reciprocal functions look quite similar to polynomials - instead of raising an input to a positive integer, we raise it to a negative one. The first three such functions look like

\begin{equation} f_1(x) = x^{-1} = \frac{1}{x}\,\,, ~~ f_2(x) = x^{-2} = \frac{1}{x^2}\,\,,~~ f_3(x)=x^{-3} = \frac{1}{x^3}\,\, \end{equation}

In general, raising an input to the power $-n$ means $x^{-n} = \frac{1}{x^n}$. Several examples of reciprocal functions are plotted by the Python cell below.

# plot first few reciprocal functions

baslib.basics_plotter.recip_plotter()

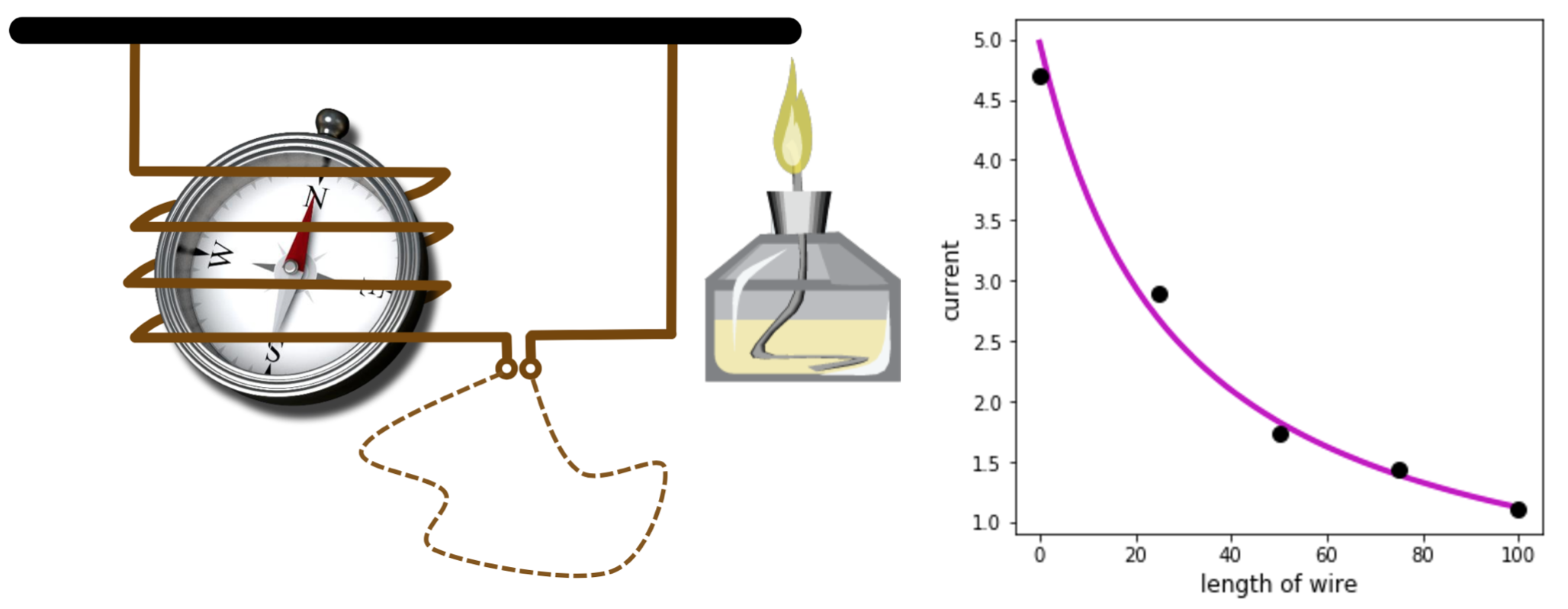
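The identity $x^{-n} = \frac{1}{x^n}$ behind the reciprocal functions can be checked numerically. This is a minimal sketch. The helper name `reciprocal` is hypothetical, and the inputs are chosen to avoid $x = 0$, where these functions are undefined.

```python
import numpy as np

def reciprocal(x, n):
    """Evaluate the n-th reciprocal function f_n(x) = 1 / x**n (hypothetical helper)."""
    return 1.0 / np.asarray(x, dtype=float) ** n

# nonzero sample inputs
x = np.array([0.5, 1.0, 2.0])

for n in (1, 2, 3):
    values = reciprocal(x, n)
    # raising to a negative power gives the same result
    assert np.allclose(values, x ** (-n))
    print(f"f_{n}(x) =", values)
```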

Ohm’s law, proposed by the German physicist Georg Simon Ohm following a series of experiments he made in the 1820s, connects the magnitude of the current in a galvanic circuit to the sum of all the exciting forces in the circuit, as well as the length of the circuit. Although he did not publish any account of his experimental results, it is easy to verify his law using a simple experimental setup, shown in the left panel below, that is very similar to what he then utilized. This image is taken from Machine Learning Refined. The spirit lamp heats up the circuit, generating an electromotive force which creates a current in the coil, deflecting the needle of the compass. The tangent of the deflection angle is directly proportional to the magnitude of the current passing through the circuit. The magnitude of this current is inversely proportional to the length of the wire used to close the circuit (dashed curve). In the right panel below we show the readings of the current (in terms of the tangent of the deflection angle) for 5 different lengths (in cm) of the closing wire.

The basic trigonometric elementary functions are derived from the simple relations of a right triangle, and take on a wavy and repeating (or periodic) shape. The first of these are the sine and cosine functions. These two functions originate in tracking the width and height of a single point on the unit circle

\begin{equation} x^2 + y^2 = 1 \end{equation}

as it smoothly moves counterclockwise around the circle - as illustrated in the animation produced by the following Python cell. Here the point on the unit circle is pointed to using an arrow stemming from the origin.

# create an animation showing the origin of the sine and cosine functions

baslib.trig_hyper_visualizer.sin_cos(num_frames=200)
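The relationship between the unit circle and the sine and cosine functions can also be checked numerically. In the minimal sketch below a point is placed on the circle at several angles, its width is given by the cosine and its height by the sine, and the circle equation is verified at each angle. The choice of nine sample angles is an assumption made only for illustration.

```python
import numpy as np

# angles from 0 to 2*pi in steps of 45 degrees (counterclockwise)
theta = np.linspace(0, 2 * np.pi, 9)

# width (horizontal coordinate) and height (vertical coordinate) of the point
x = np.cos(theta)
y = np.sin(theta)

# every such point satisfies the unit circle equation x**2 + y**2 = 1
on_circle = np.allclose(x ** 2 + y ** 2, 1.0)
print("all points on the unit circle:", on_circle)

for t, xi, yi in zip(theta, x, y):
    print(f"angle = {np.degrees(t):6.1f} deg   cos = {xi:+.3f}   sin = {yi:+.3f}")
```

Since the point returns to where it started after one full trip around the circle, the printed values at 0 and 360 degrees agree, which is the periodic behavior described above.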