Here we discuss an often used variation of the original perceptron, called the margin perceptron, that is once again based on analyzing the geometry of the classification problem where a line (or hyperplane in higher dimensions) is used to separate two classes of data. We then build on this fundamental concept to derive support vector machines, a popular method used for linear classification. While this approach provides interesting insight into the two-class classification process, we will eventually see that support vector machines do not greatly differ fundamentally from logistic regression (or, likewise, the perceptron).

# imports from custom library

import sys

sys.path.append('../../')

import matplotlib.pyplot as plt

from mlrefined_libraries import superlearn_library as superlearn

import autograd.numpy as np

import pandas as pd

%matplotlib notebook

# this is needed to compensate for %matplotlib notebook's tendancy to blow up images when plotted inline

from matplotlib import rcParams

rcParams['figure.autolayout'] = True

%load_ext autoreload

%autoreload 2

In this Section we derive support vector machines based on the notion of a margin-perceptron, a close relative to the perceptron approach described in the previous post. For perfectly linearly separable datasets the support vector machine provides a uniquely different hyperplane than that of logistic regression or the perceptron. Nonetheless, because perfectly linearly separable datasets are the exception rather than the rule, we will see that support vector machines in general do not provide a considerably different decision boundary than the one provided by logistic regression or the perceptron.

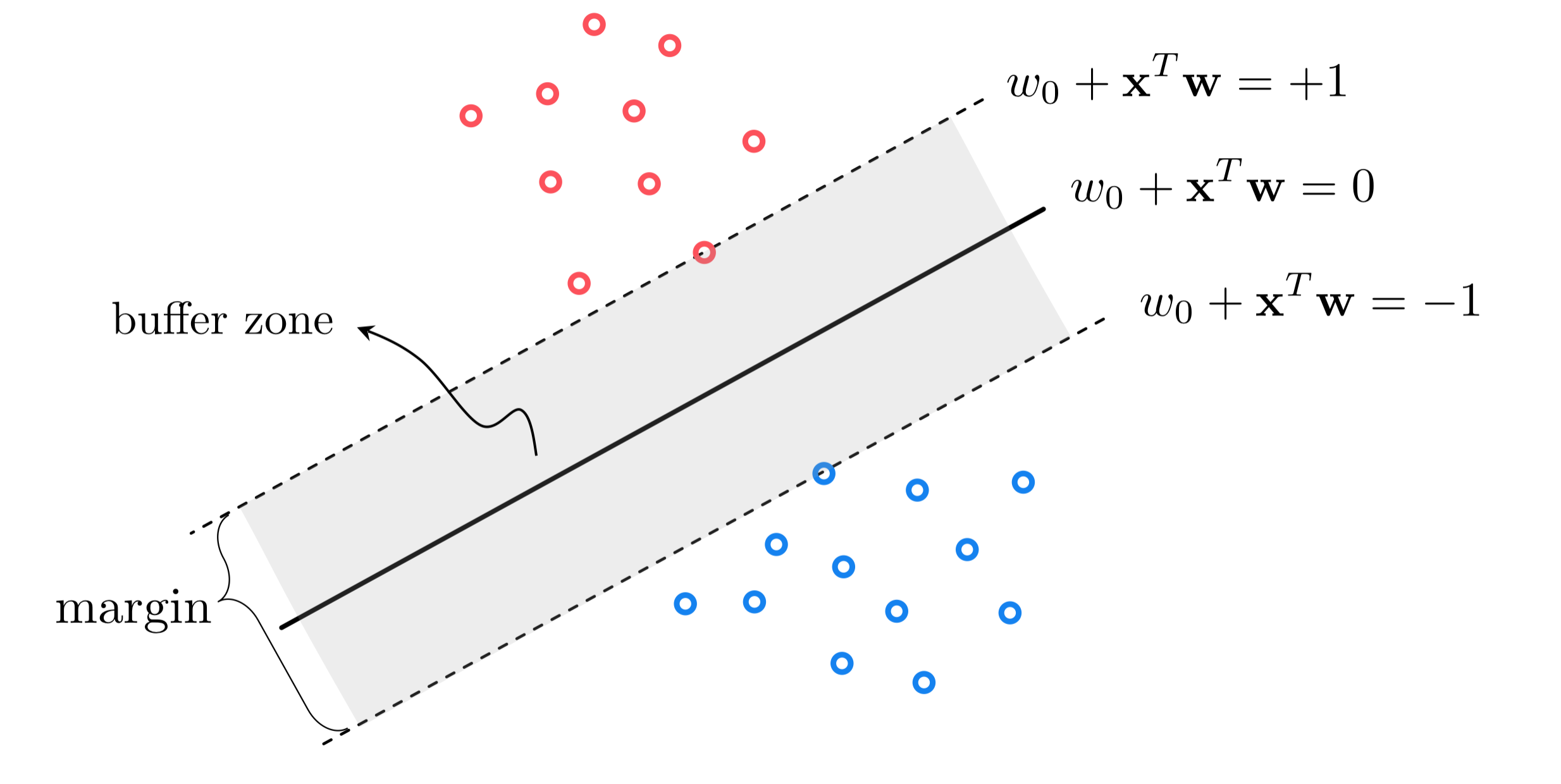

Suppose once again that we have a two-class classification training dataset of $P$ points $\left\{ \left(\mathbf{x}_{p},y_{p}\right)\right\} _{p=1}^{P}$ with the labels $y_p \in \{-1,+1\}$. Suppose for the time being that we are dealing with a two-class dataset that is perfectly linearly separable with a known linear decision boundary $w_0+\mathbf{x}^{T}\mathbf{w}^{\,}=0$ passing evenly between the two classes as illustrated in the figure below.

This separating hyperplane creates a buffer between the two classes confined between two evenly shifted versions of itself: one version that lies on the positive side of the separator and just touches the class having labels $y_{p}=+1$ taking the form $w_0+\mathbf{x}^{T}\mathbf{w}^{\,}=+1$, and one lying on the negative side of it just touching the class with labels $y_{p}=-1$ taking the form $w_0+\mathbf{x}^{T}\mathbf{w}^{\,}=-1$. The translations above and below the separating hyperplane are more generally defined as $w_0+\mathbf{x}^{T}\mathbf{w}^{\,}=+\beta^{\,}$ and $w_0+\mathbf{x}^{T}\mathbf{w}^{\,}=-\beta^{\,}$ respectively, where $\beta>0$. However by dividing off $\beta$ in both equations and reassigning the variables as $w_0\longleftarrow\frac{w_0}{\beta}$ and $\mathbf{w}\longleftarrow\frac{\mathbf{w}}{\beta}$, we can leave out the redundant parameter $\beta$ and have the two translations as stated $w_0+\mathbf{x}^{T}\mathbf{w}^{\,}=\pm1$.

The fact that all points in the $+1$ class lie exactly on or on the positive side of $w_0+\mathbf{x}^{T}\mathbf{w}^{\,}=+1$, and all points in the $-1$ class lie exactly on or on the negative side of $w_0+\mathbf{x}^{T}\mathbf{w}^{\,}=-1$ can be written formally as the following conditions

We can combine these conditions into a single statement by multiplying each by their respective label values, giving the single inequality $y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right)\geq1$ which can be equivalently written as

\begin{equation} \mbox{max}\left(0,\,1-y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right)\right)=0 \end{equation}Again, this value is always nonnegative. Summing up all $P$ equations of the form above gives the margin-perceptron

Notice the striking similarity between the original perceptron cost from the previous post and the margin perceptron cost above: naively we have just 'added a $1$' to the non-zero input of the max function in each summand. However this additional $1$ prevents the issue of a trivial zero solution with the original perceptron discussed previously, which simply does not arise here.

If the data is indeed linearly separable any hyperplane passing between the two classes will have parameters $w_0$ and $\mathbf{w}$ where $g\left(w_0, \mathbf{w}\right)=0$. However the margin perceptron is still a valid cost function even if the data is not linearly separable. The only difference is that with such a dataset we can not make the criteria above hold for all points in the dataset. Thus a violation for the $p^{\textrm{th}}$ point adds the positive value of $1-y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right)$ to the cost function.

In this example we use (unnormalized) gradient descent to minimize the margin-perceptron cost function. Note however that, similar to what we saw in Example 1 of our post on the perceptron, the magnitude of the full cost function's gradient will not necessarily diminish to zero eventually, but will stay fixed (in magnitude) based on the dataset. This again motivates the use of a diminishing steplength.

In the next Python cell we load in a dataset originally introduced in our post on logistic regression.

# load in dataset

data = np.loadtxt('../../mlrefined_datasets/superlearn_datasets/3d_classification_data_v0.csv',delimiter = ',')

# define the input and output of our dataset - assuming arbitrary N > 2 here

x = data[:,:-1]

y = data[:,-1]

y.shape = (len(y),1)

Next, we implement the margin-perceptron cost function in Python.

# the relu cost function

def margin_perceptron(w):

cost = 0

for p in range(0,len(y)):

x_p = x[p]

y_p = y[p]

a_p = w[0] + sum([a*b for a,b in zip(w[1:],x_p)])

cost += np.maximum(0,1-y_p*a_p)

return cost

Then, we make a gradient descent run of 50 steps, randomly initialized, with a diminishing steplength value $\alpha = \frac{1}{k}$ where $k$ is the step number.

# declare an instance of our current our optimizers

opt = superlearn.optimimzers.MyOptimizers()

# run desired algo with initial point, max number of iterations, etc.,

w_hist = opt.gradient_descent(g = margin_perceptron,w = np.random.randn(np.shape(x)[1]+1,1),max_its = 50,alpha = 10**-2,steplength_rule = 'diminishing')

Finally, we plot the dataset and the learned decision boundary $w_0^{\star}+\mathbf{x}^{T}\mathbf{w}^{\star} = 0 $ (right panel) as well as the logistic surface $\text{tanh}\left(w_0^{\star}+\mathbf{x}^{T}\mathbf{w}^{\star}\right)$ fit to the data in 3-dimensions (left panel).

# create instance of 3d demos

demo5 = superlearn.classification_3d_demos.Visualizer(data)

# draw the final results

demo5.static_fig(w_hist,view = [15,-140])

Modeling and optimization go hand-in-hand. If we have modeled something well but the associated cost function is difficult to minimize it can be more expedient to re-fashion the cost, even if we lose considerable modeling precision, if the new cost function is considerably easier to optimize. Here 'easier to optimize' could mean a number of things - perhaps a steplength parameter is less sensitive (meaning a larger range of potential values will provide good results), or a broader set of optimization tools can be brought to bear. The opposite holds as well - a cost function that is easy to minimize but models no useful phenomenon is a mere curiosity.

Here we find ourselves in-between these two extremes, with a cost function that can be slightly adjusted to enable a broader set of optimization tools (including a larger range of steplength values for gradient descent, as well as Newton's method) while losing only a small portion of its original shape. As with the perceptron, one way to smooth out the margin-perceptron here is by replacing max with softmax. Doing so in one summand of the margin-perceptron gives the related summand

Right away if we were to sum over all $P$ we could form a softmax-based cost function that closely matches the margin-perceptron. But note how in the derivation of the margin perceptron the '1' used in the $1-y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right)$ component of the cost could have been any number we wanted - it was a normalization factor for the width of the margin and, by convention, we used '1'.

Instead we could have chosen any value $\epsilon > 0$ in which case the set of $P$ conditions stated in equation (2) would be equivalently stated as

\begin{equation} \mbox{max}\left(0,\,\epsilon-y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right)\right)=0 \end{equation}for all $p$ and the margin-perceptron equivalently stated as

\begin{equation} g\left(w_0,\mathbf{w}\right)=\underset{p=1}{\overset{P}{\sum}}\mbox{max}\left(0,\,\epsilon-y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right)\right) \end{equation}and the softmax version of one summand here being

\begin{equation} \text{soft}\left(0,\epsilon-y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right)\right) = \text{log}\left( 1 + e^{\epsilon-y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right)}\right) \end{equation}When $\epsilon$ is quite small we of course have that $\text{log}\left( 1 + e^{\epsilon-y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right)}\right) \approx \text{log}\left( 1 + e^{-y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right)}\right)$, the same summand used for the (smoothed) perceptron and logistic regression. Thus we can in fact use the same softmax cost function here

\begin{equation} g\left(w_0,\mathbf{w}\right)=\underset{p=1}{\overset{P}{\sum}}\text{log}\left( 1 + e^{-y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right)}\right) \end{equation}as a smoothed version of our margin-perceptron cost.

From the perspective of the standard perceptron this realization is somewhat redundant - a decision boundary recovered via the perceptron on a linearly separable dataset will almost always have a non-zero margin. So this just says its smoothed version will do the same.

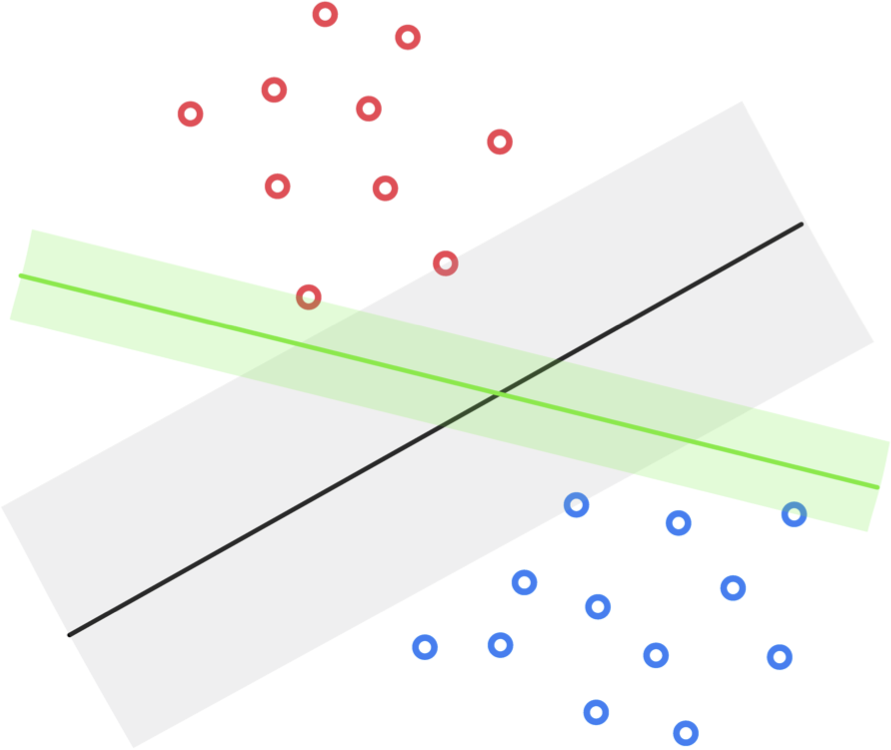

When two classes of data are linearly separable infinitely many hyperplanes could be drawn to separate the data. In the figure below we display two such hyperplanes for a given synthetic dataset. Given that both classifiers (as well as any other decision boundary perfectly separating the data) would perfectly classify the data, is there one that we can say is the 'best' of all possible separating hyperplanes?

One reasonable standard for judging the quality of these hyperplanes is via their margin lengths, that is the distance between the evenly spaced translates that just touch each class. The larger this distance is the intuitively better the associated hyperplane separates the entire space given the particular distribution of the data. This idea is illustrated pictorially in the figure below.

To find the separating hyperplane with maximum margin, remember that the margin of a particular hyperplane $w_0+\mathbf{x}^{T}\mathbf{w}^{\,}=0$ is the width of the buffer zone confined between two symmetric translations of itself, written conveniently as $w_0+\mathbf{x}^{T}\mathbf{w}^{\,}=\pm1$, each just touching one of the two classes.

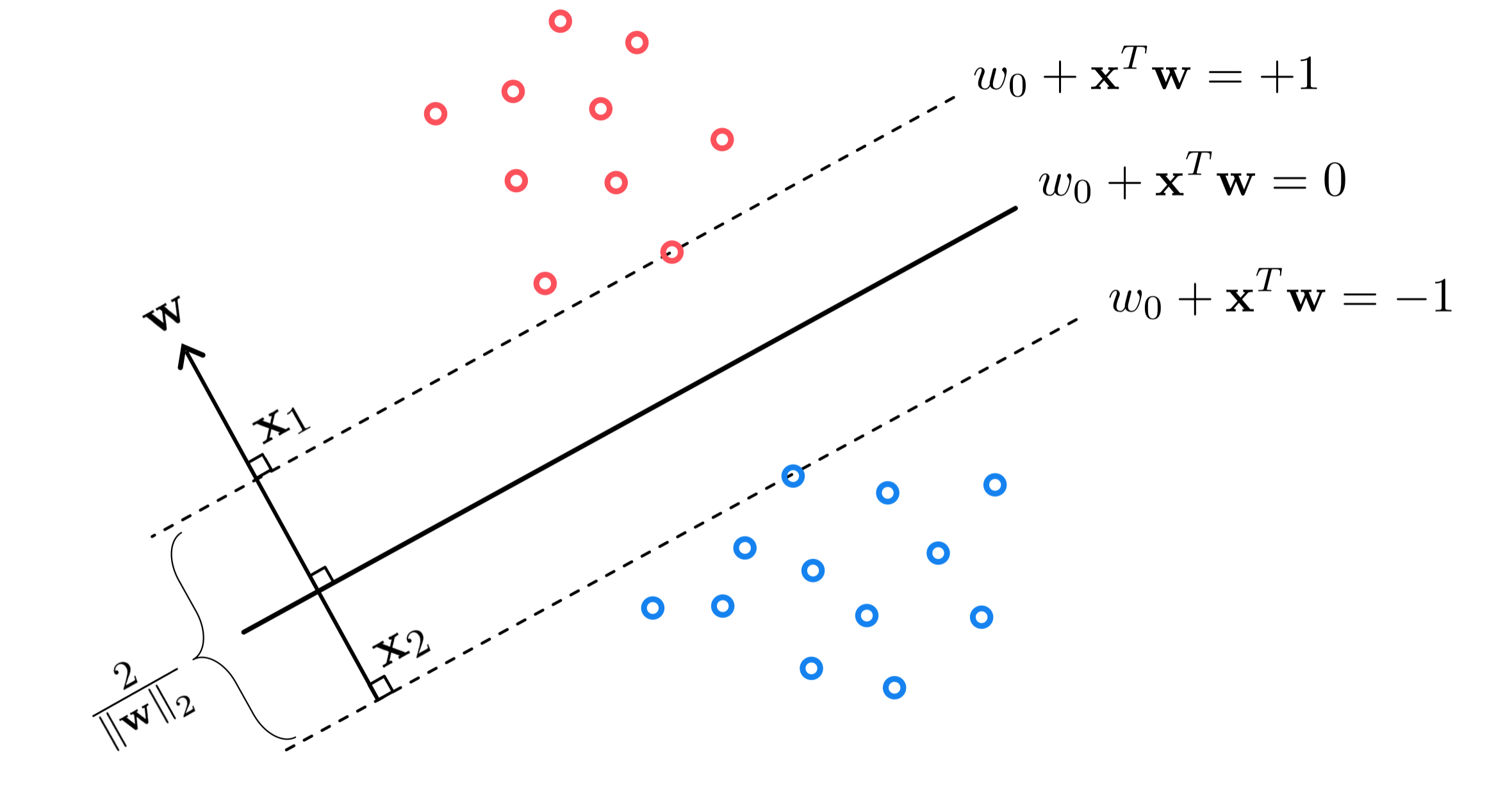

As shown in the figure below the margin can be determined by calculating the distance between any two points (one from each translated hyperplane) both lying on the normal vector $\mathbf{w}$. Denoting by $\mathbf{x}_{1}$ and $\mathbf{x}_{2}$ the points on vector $\mathbf{w}$ belonging to the positive and negative translated hyperplanes, respectively, the margin is computed simply as the length of the line segment connecting $\mathbf{x}_{1}$ and $\mathbf{x}_{2}$, i.e., $\left\Vert \mathbf{x}_{1}-\mathbf{x}_{2}\right\Vert _{2}$.

The margin can be written much more conveniently by taking the difference of the two translates evaluated at $\mathbf{x}_{1}$ and $\mathbf{x}_{2}$ respectively, as

\begin{equation} \left(w_0^{\,} + \mathbf{x}_{1}^T\mathbf{w}^{\,}\right) - \left(w_0^{\,} + \mathbf{x}_{2}^T\mathbf{w}^{\,}\right) =\left(\mathbf{x}_{1}^{\,}-\mathbf{x}_{2}^{\,}\right)^{T}\mathbf{w}^{\,}=2 \end{equation}Using the inner product rule, and the fact that the two vectors $\mathbf{x}_{1}-\mathbf{x}_{2}$ and $\mathbf{w}$ are parallel to each other, we can solve for the margin directly in terms of $\mathbf{w}$, as

\begin{equation} \left\Vert \mathbf{x}_{1}-\mathbf{x}_{2} \right\Vert _2=\frac{2}{\left\Vert \mathbf{w}\right\Vert _{2}} \end{equation}Therefore finding the separating hyperplane with maximum margin is equivalent to finding the one with the smallest possible normal vector $\mathbf{w}$.

In order to find a separating hyperplane for the data with minimum length normal vector we can simply combine our desire to minimize $\left\Vert \mathbf{w}\right\Vert _{2}^{2}$ subject to the constraint (defined by the margin-perceptron) that the hyperplane perfectly separates the data (given by the margin criterion described above). This gives the so-called hard-margin support vector machine problem

The set of constraints here are precisely the margin-perceptron guarantee that the hyperplane we recover separates the data perfectly. Unlike some of the other constrained optimization problems we have previously discussed (see e.g., those described in our post on general steepest descent methods in our series on mathematical optimization) this cannot be solved in closed form, but must be optimized iteratively.

While there are constrained optimization algorithms that are designed to solve problems like this as stated, we can also solve the hard-margin problem by relaxing the constraints and forming an unconstrained formulation of the problem (to which we can apply familiar algorithmic methods to minimize). To do this we merely bring the constraints up, forming a single cost function

\begin{equation} g\left(w_0,\mathbf{w}\right)=\underset{p=1}{\overset{P}{\sum}}\text{max}\left(0,\,1-y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right) \right) + \lambda \left \Vert\mathbf{w}\right\Vert _{2}^{2} \end{equation}to be minimized. Here the parameter $\lambda\geq0$ is called a penalty or regularization parameter. When $\lambda$ is set to a small positive value we put more 'pressure' on the cost function to make sure the constraints

\begin{equation} \text{max}\left(0,\,1-y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right) \right)=0,\,\,\,\,p=1,...,P \end{equation}indeed hold, and (in theory) when $\lambda$ is made very small the formulation above matches the original constrained form. Because of this $\lambda$ is often set to be quite small in practice.

This regularized form of the margin-perceptron cost function is referred to as the soft-margin support vector machine cost. One added value of this formulation is that it allows us to apply SVMs to datasets that are not completely linearly separable which - as mentioned previously in our post on logistic regression - is far more common in practice. When a dataset is not perfectly linearly separable this means that regardless of the weights $w_0$ and $\mathbf{w}$, we always have some data point which is misclassified, i.e., for some $p$ that $\text{max}\left(0,\,1-y_{p}^{\,}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}^{\,}\right) \right)>0$. Such a case makes the first hard-margin formulation of SVMs infeasible, as no such $w_0$ and $\mathbf{w}$ even exist that satisfy the constraints. This is not an issue for the soft-margin case since we are trying to minimize the margin-perceptron directly.

In this example we compare the support vector machine decision boundary learned to three boundaries learned via the margin-perceptron. All of the recovered boundaries perfectly separate the two classes, but the support vector machine decision boundary is the one that provides the maximum margin (right panel) while those recovered by the margin-perceptron (left panel, each colored differently) can ostensibly be any of the infinitely many linear decision boundaries that separate the two classes.

# load in dataset

data = np.loadtxt('../../mlrefined_datasets/superlearn_datasets/svm_demo_data.csv',delimiter = ',')

# define the input and output of our dataset - assuming arbitrary N > 2 here

x = data[:,:-1]

y = data[:,-1]

y.shape = (len(y),1)

# create instance of svm comparision demo

demo5 = superlearn.svm_margin_demo.Visualizer(data)

# run all

demo5.svm_comparison() # compute linear decision boundaries

demo5.svm_comparison_fig() # plot linear decision boundaries

Note how in the right panel the margins pass through points from both classes - equidistant from the SVM linear decision boundary. These points are called support vectors, hence the name support vector machine.

Notice how to produce a smoothed version of the soft-margin cost all we must do is smooth out the margin-perceptron, which as discussed previously can be done by replacing it with the softmax cost (used with the original perceptron as well as logistic regression). This gives a smoothed out soft-margin cost function of the form

\begin{equation} g\left(w_0,\mathbf{w}\right)=\underset{p=1}{\overset{P}{\sum}}\text{log}\left( 1 + e^{-y_{p}\left(w_0+\mathbf{x}_{p}^{T}\mathbf{w}\right)}\right) + \lambda \left \Vert\mathbf{w}\right\Vert _{2}^{2} \end{equation}Having seen logistic regression and the original perceptron in the previous two posts, we can interpret this as a lightly regularized version of either approach. We say lightly because while we set $\lambda$ to quite a small positive value in practice, it is important - at least on datasets that are indeed linearly separable - that $\lambda \neq 0$ exactly. We need to penalize the magnitude of $\mathbf{w}$ - as doing this guides us towards the decision boundary of maximum margin.

The output of the next Python cell shows the soft-margin hyperplane learned for the toy dataset in the previous example, using the regularized logistic regression/soft-margin support vector machine cost function. We will see that minimizing this cost function correctly indeed recovers a linear decision boundary of maximum margin for this dataset.

# load in dataset

data = np.loadtxt('../../mlrefined_datasets/superlearn_datasets/svm_demo_data.csv',delimiter = ',')

# define the input and output of our dataset - assuming arbitrary N > 2 here

x = data[:,:-1]

y = data[:,-1]

y.shape = (len(y),1)

With our dataset loaded, lets implement the smoothed soft-margin support vector machine cost.

# the relu cost function

lam = 10**(-3)

def softmargin_svm(w):

cost = 0

for p in range(0,len(y)):

x_p = x[p]

y_p = y[p]

a_p = w[0] + sum([a*b for a,b in zip(w[1:],x_p)])

cost += np.log(1 + np.exp(-y_p*a_p))+ lam*np.dot(w[1:].T,w[1:])

return cost

With our cost defined, we can minimize it over the dataset as in the next Python cell.

# declare an instance of our current our optimizers

opt = superlearn.optimimzers.MyOptimizers()

# minimize input cost via Newton's method

w_hist = opt.newtons_method(g = softmargin_svm,w = np.random.randn(np.shape(x)[1]+1,1),max_its = 10,epsilon = 10**-8)

Now in the next Python cell we plot the final decision boundary learned via the optimization above, along with its margin hyperplanes. As we see it is indeed the decision boundary with maximum margin.

# Create a demonstration instance

demo5 = superlearn.svm_margin_demo.Visualizer(data)

# draw the final results

demo5.softmargin_fig(w_hist)

Which approach - logistic regression, the perceptron, or support vector machines - is the best two-class classification scheme? Nothing we have seen so far seems to indicate one cost function's superiority over the other in general. In fact, the various geometric derivations given so far have shown how all three are intimately related via the softmax cost function - in a very real sense all three approaches lead to the same end result. In practice one approach may work better than the others in specific instances, which is why one often runs two or all three approaches in practice, computational resources permitting. But it should come as little surprise that in practice all three approaches tend to perform quite similarly overall (in terms of classification accuracy).